A/B Testing

One of the most common techniques in conversion rate optimization is to improve a page’s performance by creating two versions of the page with a common goal and testing them against each other. Conversion improvements of a couple hundred percent or more are not uncommon.

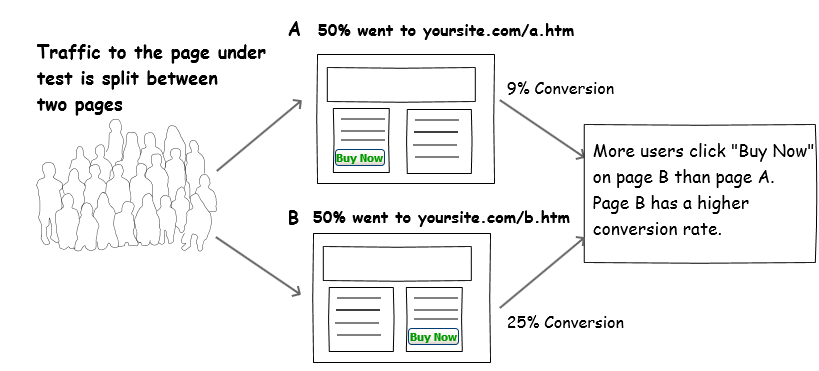

The simplest way to compare two pages is an A/B test, also known as a split test. An A/B test measures how well each of two pages achieves a common goal. Half of the visitors are randomly sent to page A and the other half to page B. The difference between the two pages can be as small as a single word of text or an image, or as large as a completely different design or layout. The shared goal defines success for both pages: for example, clicking a text link or an image, downloading a file, or visiting another page on the site.
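The 50/50 split can be sketched in a few lines of Python. This is a minimal illustration, assuming each visitor carries a stable ID (for example, from a cookie); the function name `assign_variant` and the experiment label are hypothetical, not part of any particular testing tool. Hashing the ID, rather than picking a page at random on every view, keeps a returning visitor on the same page for the whole test.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "homepage-test") -> str:
    """Return "A" or "B" for a visitor, splitting traffic roughly 50/50.

    Hypothetical helper: the experiment name is mixed into the hash so that
    separate tests split the same visitors independently.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    # Map the SHA-256 digest to a bucket from 0 to 99.
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100
    # Buckets 0-49 see page A; buckets 50-99 see page B.
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    ids = [f"visitor-{i}" for i in range(10_000)]
    share_a = sum(assign_variant(v) == "A" for v in ids) / len(ids)
    print(f"share of visitors on page A: {share_a:.1%}")
```

Run over many visitor IDs, the share sent to page A should land close to 50%, and calling the function again with the same ID always returns the same page.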

A/B testing makes for a well-controlled experiment, because everything is the same except for the changes made to each page. Since both pages run at the same time, it also controls for market conditions, advertising, and seasonal effects, which can skew tests run in series (for example, page A tested in month one and page B in month two).

The results of the test are graphed along with their statistical significance (often reported as a statistical confidence level). This shows whether enough visitors have converted on each page to be confident that the difference between them is real rather than due to chance. The graph also lets you read the outcome of the test at a glance, including when the results are still too close to call.
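The article does not say which statistical method the graph uses. One common choice for comparing two conversion rates is a two-proportion z-test, and the sketch below assumes that test; the function name `ab_confidence` is hypothetical. It returns the relative lift of page B over page A, the z-score, and a two-sided confidence level (1 minus the p-value).

```python
import math


def ab_confidence(visitors_a, conversions_a, visitors_b, conversions_b):
    """Compare two pages' conversion rates with a two-proportion z-test.

    Returns (lift, z, confidence): lift is B's relative improvement over A,
    and confidence is 1 minus the two-sided p-value.
    """
    if min(visitors_a, visitors_b) <= 0:
        raise ValueError("each page needs at least one visitor")
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    if rate_a == 0:
        raise ValueError("page A has no conversions; relative lift is undefined")

    # Pooled rate under the null hypothesis that both pages convert equally.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if se == 0:
        raise ValueError("every visitor converted; the test is undefined")

    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal distribution:
    # p = 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    lift = (rate_b - rate_a) / rate_a
    return lift, z, 1 - p_value


if __name__ == "__main__":
    lift, z, confidence = ab_confidence(1000, 50, 1000, 70)
    print(f"lift {lift:.0%}, z {z:.2f}, confidence {confidence:.1%}")
```

With 1,000 visitors per page and 50 versus 70 conversions, page B shows a 40% lift at roughly 94% confidence. That is just short of the 95% threshold many teams use (a convention, not something the article specifies), so this result would still count as too close to call.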

We no longer provide A/B testing tools, but we do provide Conversion Rate Optimization A/B Testing and Consulting.